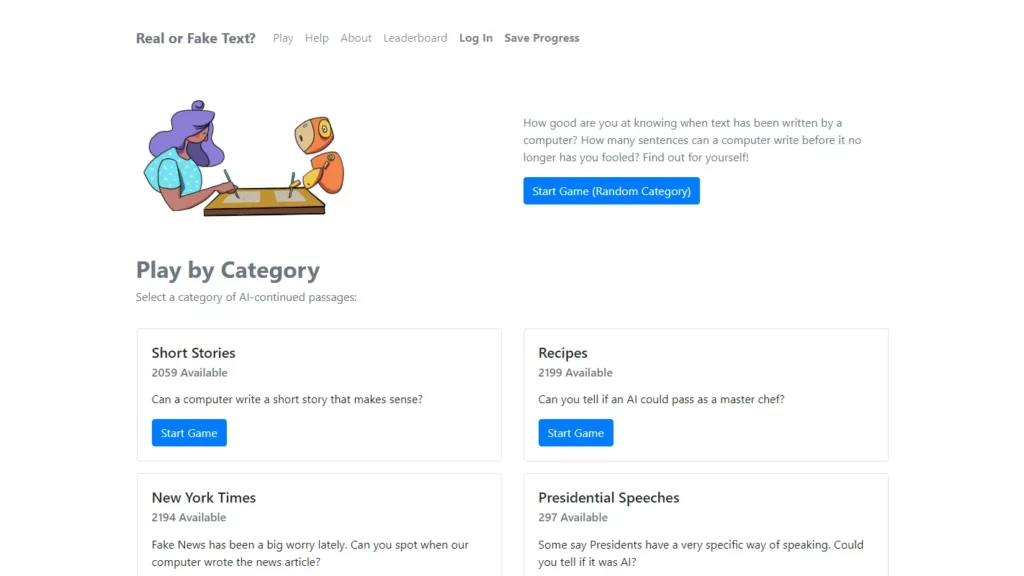

RoFT is an interactive platform designed to test users’ ability to distinguish between human-written and machine-generated text. It offers several categories of AI-continued passages, such as short stories, fake news articles, master chef recipes, and presidential speeches, and asks users to determine where the text transitions from human-written to machine-generated. RoFT aims to measure how good neural language models are at writing text by inviting users to play a game in which they decide whether each sentence was written by a computer or a human, explain their decisions, and receive points based on how precise their guesses are. Users can track their progress, view statistics, and compete on leaderboards, which encourages sustained participation.
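To make the points mechanic concrete, here is a minimal sketch of how one round could be scored. The description above says only that points depend on precision. The exact rule used here (full points for flagging the first machine-generated sentence, one point less for each sentence past it, nothing for flagging a human-written sentence) is an assumption, as are the function name `score_guess` and the `max_points` default.

```python
def score_guess(guessed_index: int, boundary_index: int, max_points: int = 5) -> int:
    """Score one round of a boundary-detection game (hypothetical rule).

    guessed_index:  index of the sentence the player flagged as the first
                    machine-generated one.
    boundary_index: index of the sentence that is actually the first
                    machine-generated one.
    """
    if guessed_index < boundary_index:
        # The player flagged a sentence that was still human-written.
        return 0
    # Lose one point per sentence past the true boundary, never below zero.
    return max(0, max_points - (guessed_index - boundary_index))


if __name__ == "__main__":
    for guess in (2, 3, 5, 9):
        print(guess, score_guess(guess, boundary_index=3))
```

A rule of this shape rewards guesses that land on or just after the transition, so a player's total reflects how quickly they notice the switch.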

⚡Top 5 RoFT Features:

- Game-based Interface: The RoFT website presents its tasks as games, making the process engaging and interactive.

- Multiple Text Domains: The platform offers a variety of text domains, including news and fictional stories, to test users’ ability to detect machine-generated text.

- Leaderboard System: Users can compete with each other on the leaderboard, encouraging them to participate more actively in annotating tasks.

- Detailed Metadata Collection: RoFT collects detailed metadata for each annotation, enabling researchers to analyze various aspects of human detection performance.

- Gamification Elements: RoFT plans to add further gamification elements such as leaderboards broken down by text domain, comprehensive statistics on user progress and skill, and the ability to see and upvote free-text comments of other users.

⚡Top 5 RoFT Use Cases:

- Evaluating AI Performance: RoFT is used to evaluate the performance of artificial intelligence models in generating human-like text.

- Training Human Detectors: The platform aims to train humans to better detect generated text, improving their understanding of what makes text sound human.

- Comparing Model Performance: Researchers can use RoFT to compare the performance of different language models across various genres and prompts.

- Analyzing Visibility of Errors: The dataset collected through RoFT can be used to analyze the visibility of model errors and improve automatic error correction systems.

- Assessing Detection Skills: Individuals can test their skills at detecting machine-generated text using the game interface provided by RoFT.
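As an illustration of the model-comparison use case, the sketch below averages players' points per model and text domain. The record fields (`model`, `domain`, `points`) and the function name `mean_points_by_group` are hypothetical; the actual RoFT dataset schema is not described here.

```python
from collections import defaultdict
from statistics import mean


def mean_points_by_group(annotations, keys=("model", "domain")):
    """Average the points earned per group of annotations.

    Each annotation is a dict; the field names used here are assumptions
    made for illustration only.
    """
    grouped = defaultdict(list)
    for annotation in annotations:
        group = tuple(annotation[key] for key in keys)
        grouped[group].append(annotation["points"])
    return {group: mean(points) for group, points in grouped.items()}


if __name__ == "__main__":
    sample = [
        {"model": "model-a", "domain": "news", "points": 5},
        {"model": "model-a", "domain": "news", "points": 3},
        {"model": "model-b", "domain": "news", "points": 0},
    ]
    for group, avg in mean_points_by_group(sample).items():
        print(group, avg)
```

Under this reading, a lower average for a model means players found its text harder to tell apart from human writing in that domain.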