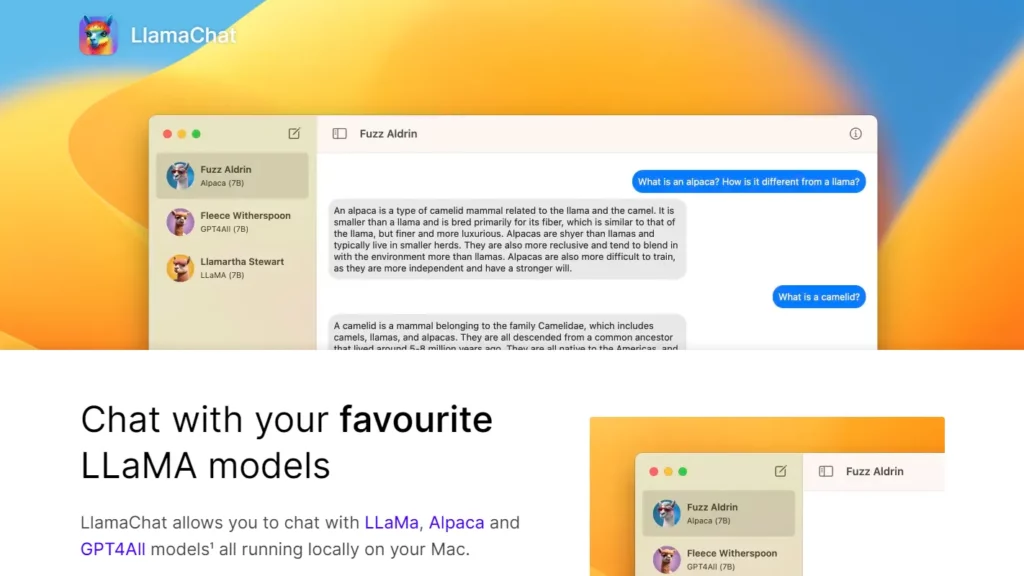

LlamaChat is a local macOS application for chatting with large language models such as LLaMA, Alpaca, and GPT4All. The models run entirely on the user's Mac, so no internet connection is required. LlamaChat can import raw published PyTorch model checkpoints or pre-converted `.ggml` model files. It is free and fully open-source, built on open-source libraries such as llama.cpp and llama.swift. The app requires macOS 13 Ventura and runs on either Intel or Apple Silicon Macs.
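Because LlamaChat accepts both raw PyTorch checkpoints and pre-converted `.ggml` files, an importer first has to tell the two apart. The sketch below illustrates one way to do that by inspecting the file header. It is written in Python and is not LlamaChat's own (Swift) code. It assumes that zip-based `torch.save` checkpoints start with the `PK\x03\x04` ZIP signature and that `.ggml` files begin with one of the little-endian magic numbers llama.cpp used in 2023 (`ggml`, `ggmf`, `ggjt`). The helper names `classify_header` and `classify_model_file` are hypothetical.

```python
from pathlib import Path

# Assumption: zip-based checkpoints written by torch.save() (PyTorch >= 1.6)
# start with the standard ZIP local-file-header signature.
ZIP_MAGIC = b"PK\x03\x04"

# Assumption: 2023-era llama.cpp model files begin with one of these magics,
# stored as a little-endian uint32: "ggml", "ggmf", "ggjt".
GGML_MAGICS = frozenset({0x67676D6C, 0x67676D66, 0x67676A74})


def classify_header(header: bytes) -> str:
    """Hypothetical helper: classify a model file from its first bytes."""
    head = header[:4]
    if len(head) < 4:
        return "unknown"
    if head == ZIP_MAGIC:
        return "pytorch"  # raw checkpoint: would need conversion first
    if int.from_bytes(head, "little") in GGML_MAGICS:
        return "ggml"  # pre-converted: could be loaded directly
    return "unknown"


def classify_model_file(path: str) -> str:
    """Hypothetical helper: read the first 4 bytes of a file and classify it."""
    with Path(path).open("rb") as f:
        return classify_header(f.read(4))
```

Checking only the header keeps the test cheap even for multi-gigabyte model files; a real importer would still need to validate the rest of the file.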

⚡Top 5 LlamaChat Features:

- State-of-the-Art Large Language Model for Coding: Code Llama can generate code, and natural language about code, from both code and natural-language prompts.

- Open Platform: Gives developers, researchers, and businesses access to AI models, tools, and resources.

- Democratizing Access: The models and tools are licensed for both research and commercial use, promoting openness and collaboration.

- Empowering Developers: Provides tools like Code Llama, which generates code and related text, and Purple Llama, which focuses on trust and safety.

- Prompt Engineering Course: A free course on DeepLearning.AI teaches how to use Llama 2 models effectively.
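Much of using Llama 2 chat models effectively comes down to sending prompts in the format they were fine-tuned on. The Python sketch below builds a single-turn prompt, assuming the `[INST]` and `<<SYS>>` delimiters from Meta's reference Llama 2 chat code and assuming the tokenizer adds the `<s>` BOS token separately. The function name `llama2_chat_prompt` is hypothetical.

```python
# Assumption: delimiters as used in Meta's reference Llama 2 chat code.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def llama2_chat_prompt(system: str, user: str) -> str:
    """Hypothetical helper: build a single-turn Llama 2 chat prompt.

    The system prompt is wrapped in <<SYS>> tags and placed inside the
    first [INST] block; the BOS token is left to the tokenizer.
    """
    content = f"{B_SYS}{system.strip()}{E_SYS}{user.strip()}"
    return f"{B_INST} {content} {E_INST}"
```

For example, `llama2_chat_prompt("You are concise.", "What is GGML?")` returns `"[INST] <<SYS>>\nYou are concise.\n<</SYS>>\n\nWhat is GGML? [/INST]"`.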

⚡Top 5 LlamaChat Use Cases:

- Chat with LLaMA Models: Engage in conversations with various models, such as LLaMA, Alpaca, and GPT4All, locally on your Mac.

- Convert Models Easily: Import raw published PyTorch model checkpoints or pre-converted `.ggml` model files, and LlamaChat handles the conversion.

- Fully Open-Source: Leverage open-source libraries like llama.cpp and llama.swift to develop applications with Llama models.

- Support for Multiple Models: LlamaChat currently supports LLaMA, Alpaca, and GPT4All models, with plans to add support for Vicuna and Koala.

- Customizable Personality: Customize the behavior of Llama models by adjusting their personalities through user interface options.
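One plausible way a personality setting can shape a model's behavior is by prepending a short description of that personality to each prompt. The Python sketch below assumes the Stanford Alpaca instruction template (the variant without an input field), since Alpaca is one of the supported models. The function `build_prompt` and the personality text are hypothetical, and this is not necessarily how LlamaChat implements the feature.

```python
# Assumption: the Stanford Alpaca "no input" instruction template.
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def build_prompt(personality: str, message: str) -> str:
    """Hypothetical helper: fold a personality description into the instruction.

    An empty personality leaves the user's message unchanged.
    """
    persona = personality.strip()
    text = message.strip()
    instruction = f"{persona}\n\n{text}" if persona else text
    return ALPACA_TEMPLATE.format(instruction=instruction)


# Usage: a playful persona applied to a simple request.
prompt = build_prompt("You are a cheerful pirate.", "Say hello.")
```

Keeping the personality inside the instruction, rather than inventing a new section, keeps the prompt in the shape the model was fine-tuned on.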