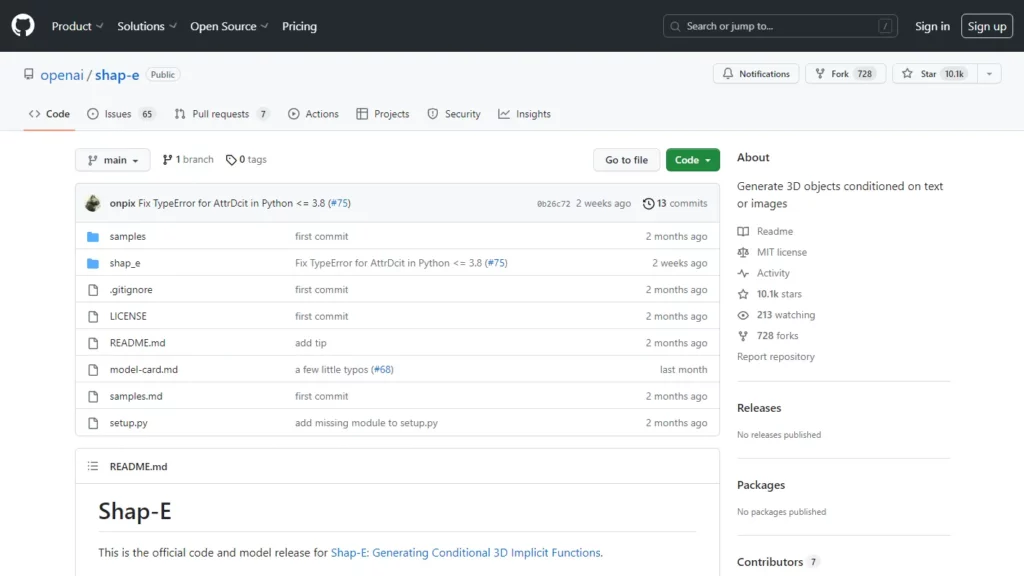

Shap-E is a system that generates 3D objects conditioned on text or images. It uses a conditional diffusion model to produce a 3D asset (not a 2D image) from a text prompt or an input image, and it was introduced in the paper “Shap-E: Generating Conditional 3D Implicit Functions” by Heewoo Jun and Alex Nichol of OpenAI. The repository includes usage guidance, sample outputs from the text-conditional model, and installation instructions. To install, run `pip install -e .` from the root of the cloned repository; worked examples are in the notebooks `sample_text_to_3d.ipynb` and `sample_image_to_3d.ipynb`.
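The text-to-3D notebook can be condensed into a short script. What follows is a minimal sketch, assuming the package is installed from the repository and the pretrained weights can be downloaded on first use. The module paths, model names (`transmitter`, `text300M`), and sampler settings mirror `sample_text_to_3d.ipynb` as published and may change between versions. The helper names `sampler_settings` and `text_to_meshes` and the output filenames are illustrative and not part of the library.

```python
def sampler_settings(prompt, batch_size=1, guidance_scale=15.0):
    """Keyword arguments for sample_latents, following the notebook's values."""
    return dict(
        batch_size=batch_size,
        guidance_scale=guidance_scale,
        model_kwargs=dict(texts=[prompt] * batch_size),
        progress=True,
        clip_denoised=True,
        use_fp16=True,
        use_karras=True,
        karras_steps=64,
        sigma_min=1e-3,
        sigma_max=160,
        s_churn=0,
    )


def text_to_meshes(prompt, batch_size=1, out_prefix="example_mesh"):
    """Sample latents for `prompt` and write one .ply mesh per sample."""
    # Imported lazily so sampler_settings works without torch or shap_e installed.
    import torch
    from shap_e.diffusion.gaussian_diffusion import diffusion_from_config
    from shap_e.diffusion.sample import sample_latents
    from shap_e.models.download import load_config, load_model
    from shap_e.util.notebooks import decode_latent_mesh

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    xm = load_model("transmitter", device=device)  # decodes latents into implicit functions
    model = load_model("text300M", device=device)  # text-conditional diffusion model
    diffusion = diffusion_from_config(load_config("diffusion"))

    latents = sample_latents(
        model=model, diffusion=diffusion, **sampler_settings(prompt, batch_size)
    )

    paths = []
    for i, latent in enumerate(latents):
        path = f"{out_prefix}_{i}.ply"  # hypothetical output name
        with open(path, "wb") as f:
            decode_latent_mesh(xm, latent).tri_mesh().write_ply(f)
        paths.append(path)
    return paths
```

Calling `text_to_meshes("a shark")` would then write `example_mesh_0.ply` to the working directory. A GPU is strongly advisable; sampling on CPU is likely to be slow.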

⚡Top 5 Shap-E Features:

- Text-conditional model: Generate 3D objects directly from text prompts, so a short description is enough to produce a 3D model.

- Image-conditional model: Shap-E can also generate 3D objects conditioned on synthetic view images, which can be used to create 3D models based on 2D images.

- Implicit function generation: Generate the parameters of implicit functions that can be rendered as both textured meshes and neural radiance fields, providing flexibility in rendering options.

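- Encoder and diffusion model: Shap-E is trained in two stages. First, an encoder learns to deterministically map 3D assets to the parameters of an implicit function. Second, a conditional diffusion model is trained on the encoder's outputs, so that at generation time it can sample those parameters from a text or image condition.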

- Fast convergence and sample quality: Shap-E converges faster than Point-E, an explicit generative model over point clouds, and achieves comparable or better sample quality despite modeling a higher-dimensional, multi-representation output space.
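
To make the idea of an implicit function concrete: instead of storing vertices or points, the shape is a function that can be queried at any 3D coordinate. The toy sketch below substitutes a hand-written signed distance function for a sphere in place of Shap-E's learned network, an assumption made purely for illustration; `sphere_sdf`, `occupancy_grid`, and `density` are hypothetical names. It shows how one function can answer both a surface-style query, the kind used for mesh extraction, and a density-style query, the kind used for volume rendering.

```python
import math


def sphere_sdf(x, y, z, radius=0.5):
    """Signed distance to a sphere centred at the origin: negative inside."""
    return math.sqrt(x * x + y * y + z * z) - radius


def occupancy_grid(sdf, resolution=8, extent=1.0):
    """Sample the SDF at cell centres of the cube [-extent, extent]^3.

    Returns a nested list of booleans; True means the cell centre is inside.
    """
    step = 2 * extent / resolution
    coords = [-extent + (i + 0.5) * step for i in range(resolution)]
    return [[[sdf(x, y, z) < 0 for z in coords] for y in coords] for x in coords]


def density(sdf, x, y, z, sharpness=20.0):
    """Map signed distance to a soft density in (0, 1).

    A stand-in for a NeRF-style density field, not Shap-E's actual output.
    """
    return 1.0 / (1.0 + math.exp(sharpness * sdf(x, y, z)))


grid = occupancy_grid(sphere_sdf)
inside = sum(cell for plane in grid for row in plane for cell in row)
print(f"{inside} of {8 ** 3} cells are inside the sphere")
print(f"density at centre: {density(sphere_sdf, 0, 0, 0):.3f}")
print(f"density outside:   {density(sphere_sdf, 0.9, 0, 0):.3f}")
```

In Shap-E the function's parameters come from the diffusion model rather than being written by hand, but the querying pattern is the same: the representation is resolution-independent until it is sampled for rendering.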

⚡Top 5 Shap-E Use Cases:

- Text-to-3D model: This tool generates 3D models based on text prompts, which can be useful in various applications such as architectural design, product visualization, and game development.

- Image-to-3D model: This tool generates a 3D model from a 2D image, which can be useful for approximate 3D reconstruction and virtual reality content creation.

- Conditional generation: Because output is conditioned on a text prompt or an image, users can create custom 3D assets tailored to specific needs or preferences.

- Multi-representation output space: Shap-E’s support for both textured meshes and neural radiance fields enables users to choose the most suitable rendering method for their application.

- Fast training and inference: According to the paper, Shap-E converges faster than Point-E during training and can generate assets in a matter of seconds, which makes it a practical choice for producing 3D models in domains such as art, engineering, and entertainment.
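
The image-to-3D and multi-representation use cases can be combined in one short script. This is a minimal sketch, assuming the package and pretrained weights are available; the model name `image300M`, the guidance scale of 3.0, and the rendering mode strings `nerf` and `stf` follow `sample_image_to_3d.ipynb` as published and may change. The names `check_render_mode` and `image_to_views` are illustrative, and the image path is supplied by the caller.

```python
RENDER_MODES = ("nerf", "stf")  # neural radiance field vs. textured-mesh rendering


def check_render_mode(mode):
    """Reject rendering modes other than the two used in the notebooks."""
    if mode not in RENDER_MODES:
        raise ValueError(f"render_mode must be one of {RENDER_MODES}, got {mode!r}")
    return mode


def image_to_views(image_path, render_mode="nerf", size=64):
    """Generate one 3D latent from an image and render it from a ring of cameras."""
    check_render_mode(render_mode)
    # Imported lazily so the mode check works without torch or shap_e installed.
    import torch
    from shap_e.diffusion.gaussian_diffusion import diffusion_from_config
    from shap_e.diffusion.sample import sample_latents
    from shap_e.models.download import load_config, load_model
    from shap_e.util.image_util import load_image
    from shap_e.util.notebooks import create_pan_cameras, decode_latent_images

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    xm = load_model("transmitter", device=device)   # decodes latents into implicit functions
    model = load_model("image300M", device=device)  # image-conditional diffusion model
    diffusion = diffusion_from_config(load_config("diffusion"))

    latents = sample_latents(
        batch_size=1,
        model=model,
        diffusion=diffusion,
        guidance_scale=3.0,
        model_kwargs=dict(images=[load_image(image_path)]),
        progress=True,
        clip_denoised=True,
        use_fp16=True,
        use_karras=True,
        karras_steps=64,
        sigma_min=1e-3,
        sigma_max=160,
        s_churn=0,
    )

    # size is the side length of each rendered frame; larger values render more slowly.
    cameras = create_pan_cameras(size, device)
    return decode_latent_images(xm, latents[0], cameras, rendering_mode=render_mode)
```

Switching `render_mode` between `"nerf"` and `"stf"` renders the same latent through either representation, which is one way to compare them for a given application.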